Available online at: https://doi.org/10.18778/1898-6773.87.1.06

https://orcid.org/0009-0001-1333-5198

Department of Philosophy and History of Science, Faculty of Science, Charles University

https://orcid.org/0000-0002-3277-8365

Department of Philosophy and History of Science, Faculty of Science, Charles University

ABSTRACT: Some recent studies suggest that artificial intelligence can create realistic human faces that are subjectively indistinguishable from the faces of real people. We compared static facial photographs of 197 real men with a sample of 200 male faces generated by artificial intelligence to test whether they converge in basic morphological characteristics such as shape variation and bilateral asymmetry. Both datasets depicted standardized faces of European men with a neutral expression. We used geometric morphometrics to investigate their facial morphology and to calculate measures of shape variation and asymmetry. We found that the natural faces of real individuals were more variable in facial shape than the artificially generated faces. Moreover, the artificially synthesized faces showed lower levels of facial asymmetry than the control group. Despite the rapid development of generative adversarial networks, natural faces are thus still statistically distinguishable from artificial ones by objective measurements. We recommend that researchers in face perception who aim to use artificially generated faces as ecologically valid stimuli check whether the morphological variance of their stimuli is comparable with that of natural faces in the target population.

KEY WORDS: geometric morphometrics, GAN, artificial intelligence, human face, morphology, symmetry.

In recent years, internet users have become increasingly familiar with online content synthesized by artificial intelligence (AI). AI engines write songs, create art pieces, poems, or love letters (Cetinic and She 2022). Many online services that use content-generating AIs have become widely popular. People are getting used to the idea that independent creativity, once believed to be an exclusive domain of humans, can be ‘extracted’ from the human brain and replicated by well-trained, hierarchically organized pieces of code.

This is unlikely to be the final stop on the highway of human-to-AI devolution. Numerous industries have keenly taken up the new opportunities. AI-generated content is becoming popular in media and entertainment, marketing, advertising, cinema production, and retail, and the list could go on. A special type of AI engine, the so-called generative adversarial network (GAN), is in high demand in 3D modelling and can generate images of living entities nearly indistinguishable from real ones (Anantrasirichai and Bull 2022).

Recent generations of GANs have shown remarkable progress in replicating human morphometric features and generating realistic images of faces and bodies – both in 2D and 3D – of people who never existed but look amazingly life-like (Anantrasirichai and Bull 2022). This phenomenon raises a number of ethical questions, mostly related to the use of deep fakes (Mustak et al. 2023). It has also led to increased investment in the cybersecurity industry and related fields that aim to protect individuals against nonconsensual use of their private biometric data and the public against disinformation (Pasquini et al. 2023; Wong 2022).

In February 2022, the media widely reported on a University of Texas study (Nightingale and Farid 2022) which suggested that synthesis engines have now left the “uncanny valley,” meaning they are past the point where robots that look almost but not completely human-like evoke a negative emotional response in people (Geller 2008). The Nightingale and Farid study suggested that AI engines are now capable of creating fictional faces which are not only indistinguishable from the faces of real people but even more trustworthy. People are thus not only prone to perceive artificial faces as real, but they also consider artificial faces more trustworthy-looking, i.e., as having facial features that inspire confidence. This research was based on a survey in which people evaluated faces and marked them as either real or artificially created. We noticed some peculiarities in the way the dataset of natural and AI-generated faces was compiled for this survey: the AI-created faces looked more smiley and generally more friendly than the natural ones, which created a potential for bias in the judgements. Still, several other studies provided further support for the claims made by Nightingale and Farid (Bray, Johnson, and Kleinberg 2023; Lago et al. 2022; Rossi et al. 2022; Tucciarelli et al. 2022).

A more recent study also claims that AI-generated faces are now indistinguishable from human faces, although it points out a possible limitation (Miller et al. 2023). Because modern AI engines are trained disproportionately on photos of individuals with white skin, generated white AI faces may look especially realistic. The authors (Miller et al. 2023) proposed the term AI Hyperrealism to describe the effect whereby white AI faces are judged as human more often than real human faces.

On the other hand, several papers indicate that people still do not perceive AI-generated faces as trustworthy (Liefooghe et al. 2023). One study (Moshel et al. 2022) used brain imaging data to investigate how the human brain processes artificially generated images. It shows that AI-generated faces can be reliably identified from people’s neural activity, and that people’s subjective judgements do not always correlate with these data, since people perform near chance when classifying real faces and realistic fakes. These findings suggest that we still cannot claim that AI-generated faces are indistinguishable from real ones. The studies by Nightingale and Farid (2022), Miller et al. (2023), and some others focused mainly on an evaluation of the photorealistic qualities of artificially synthesized faces as well as respondents’ subjective perceptions and judgements. In contrast, our objective here is to assess the degree to which real human faces and those generated by AI techniques are comparable in terms of objectively quantifiable parameters of facial morphology. To do so, we explore an alternative way of assessing differences between artificial and real faces, a method based on geometric morphometrics.

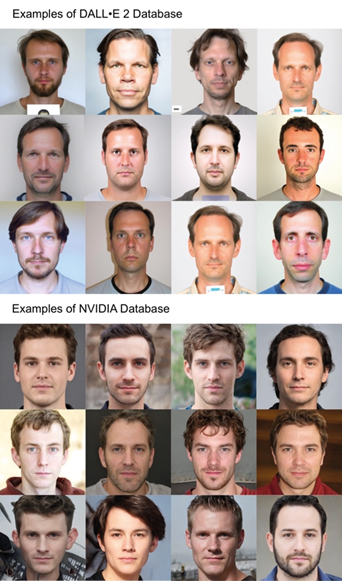

For the purposes of our study, we generated research objects using two prominent AI engines: OpenAI’s DALL·E 2 (Marcus, Davis, and Aaronson 2022) (henceforth DAL) and StyleGAN2 (henceforth NVID) developed by the NVIDIA team (Karras, Laine, and Aila 2019). DAL is a comprehensive network that is becoming extremely popular in the artistic community for its ability to create realistic images and arresting art pieces based on a description in natural language. It literally allows the artist to be the master of the AI by providing it with detailed instructions on how to create, edit, or adjust images. NVID is a project of NVIDIA, a company whose main products are graphics processors and systems-on-chip. It allows users to generate extremely realistic yet totally fake portraits of people, down to details as minute as a realistic simulation of skin texture.

Despite the existence of various facial databases (e.g., 16–19), the acquisition of facial portraits from understudied local populations may still be organizationally demanding and time-consuming. An obvious advantage of artificial stimuli is that they are just avatars and do not represent real human beings. This may save researchers the time needed to obtain approval from institutional review boards for work with human subjects, and it also reduces the burden on ethical committees. AI faces could thus serve in psychological research as a substitute for real human faces. One can easily imagine their use in research dealing with the relationship between facial appearance and associated personality factors. However, if one decides to use artificial stimuli, the crucial question is their ecological validity for perceivers from a target population.

In this study, we wanted to investigate whether the mean morphometric features – including symmetry and shape variance, here measured as morphological disparity – of AI-generated faces are the same as those of natural faces. Unlike several previous studies (Bray, Johnson, and Kleinberg 2023; Lago et al. 2022; Nightingale and Farid 2022; Rossi et al. 2022; Tucciarelli et al. 2022), we used standardized synthesized faces with a neutral expression and compared them to natural faces selected from our database of standardized facial portraits. Recent studies have shown that humans are no longer able to distinguish artificially generated facial stimuli from portrait photographs of real human beings. We therefore hypothesize that a comparison of morphometric features measured directly from the faces should corroborate these recent findings.

As a control group of natural faces, we used 197 male facial portraits (mean age ± SD = 26.62 ± 8.81) of European (Czech) origin, which we had used in our previous studies (Kleisner 2021; Kleisner, Pokorný, and Saribay 2019; Linke, Saribay, and Kleisner 2016). With the sample of natural faces, we wanted to cover a representative age range (from 19 to 59) that would approximately correspond to the range covered by the faces generated by GANs. Of course, the age of artificial faces cannot be objectively determined: it can only be approximately assessed based on the presented facial image, which is why the notion of age range in AI-generated faces must be taken with a grain of salt.

DAL has an intuitive user interface for artists hosted on playgroundai.com, where it is offered in a drop-down list along with another AI model. We used the version current as of December 2022. The query for the present study was “ID photo of a European man, enface.” This led to the generation of dozens of faces comparable with the face settings in our database of standardized natural facial portraits with a neutral expression. In total, DAL generated around two hundred facial portraits for us; some examples are provided in Figure 1.

Fig. 1. Examples of AI-generated faces

During data preparation, several images from the 200 generated by the DAL were excluded due to excessively cropped facial parts, such as the chin or the forehead. Then we chose 100 images for the purpose of the present study.

The NVID AI engine, in its public version as of December 2022, also functions in conjunction with a basic user interface hosted on thispersondoesnotexist.com. This AI engine has no filtering options for age, biological gender, skin color, or any other criteria, but it generates faces that look astonishingly natural, including detailed skin features. For the morphometric purposes of the current study, one hundred synthesized portraits with a neutral emotional expression and appropriate face settings were selected.

We excluded AI-generated faces (of both DAL and NVID origin) whose apparent age and emotional expression fell outside the range of the control dataset: our aim was to make sure that the AI-generated and natural faces datasets do not excessively differ in their apparent age range and emotional display. This was done by a panel of six persons, and only AI-generated faces that fell within the range defined by the set of real faces were kept for further analyses.

This study was performed in line with the principles of the Declaration of Helsinki. All procedures mentioned and followed were approved by the Institutional Review Board of the Faculty of Science of Charles University (protocol ref. number 2023/06). All photographed individuals gave informed written consent to use their portraits in scientific research. This particular study does not include information or images that could lead to the identification of any real person, because we do not use original photographs of real human beings but only shape coordinates defined on the faces that were already published within our previous works, e.g. (Kleisner et al. 2021, 2023).

Facial morphology was characterized by 72 landmarks, including 36 semilandmarks that denote curved features and outlines. Shape coordinates of all 397 facial configurations were entered into a Generalized Procrustes Analysis (GPA) using the gpagen() function in the geomorph package in R (Adams and Otárola-Castillo 2013; Schlager 2017). Procrustes-aligned configurations were projected into a tangent space. Semilandmark positions were optimized by minimizing the bending energy between corresponding points. See supplementary figure S1 for a visualization of the principal component analysis (PCA) on Procrustes residuals, i.e., aligned shape coordinates after Procrustes fit.
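The superimposition step can be illustrated with a minimal Python sketch. It is not the gpagen() implementation: it assumes 2D landmarks stored as complex numbers (x + iy), omits semilandmark sliding and the tangent-space projection, and all function names are hypothetical. It only shows the three operations that Procrustes alignment combines: centering, scaling to unit centroid size, and rotating each configuration onto an iteratively updated mean shape.

```python
import cmath
import math


def center_and_scale(shape):
    """Move the centroid to the origin and scale to unit centroid size.

    `shape` is a list of complex numbers, one per 2D landmark (an assumption
    of this sketch, chosen because rotations become complex multiplications).
    """
    centroid = sum(shape) / len(shape)
    centered = [z - centroid for z in shape]
    size = math.sqrt(sum(abs(z) ** 2 for z in centered))  # centroid size
    return [z / size for z in centered]


def rotate_onto(shape, reference):
    """Rotate `shape` to minimize the summed squared distance to `reference`."""
    s = sum(w.conjugate() * z for z, w in zip(shape, reference))
    rotation = s.conjugate() / abs(s)  # unit complex number = pure rotation
    return [rotation * z for z in shape]


def gpa(shapes, iterations=10):
    """Simplified generalized Procrustes alignment (no semilandmark sliding)."""
    aligned = [center_and_scale(s) for s in shapes]
    mean = aligned[0]  # start from the first configuration
    for _ in range(iterations):
        aligned = [rotate_onto(s, mean) for s in aligned]
        averaged = [sum(column) / len(aligned) for column in zip(*aligned)]
        mean = center_and_scale(averaged)
    return aligned, mean
```

In this sketch, two copies of the same configuration that differ only in position, size, and orientation end up with identical aligned coordinates, which is the property that makes the remaining differences interpretable as shape.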

Based on symmetrized Procrustes residuals of facial configurations, morphological disparity was calculated separately for the groups of artificial and natural faces using the morphol.disparity() function within the geomorph R package.
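As a rough illustration of what a disparity value measures, the following Python sketch computes a Procrustes variance for one group: the summed squared deviations of every landmark coordinate from the group mean shape, divided by the number of individuals. This is a simplified reading of the quantity, not the morphol.disparity() code; the function name, the (x, y) tuple format, and the division by n are assumptions of the sketch.

```python
def procrustes_variance(aligned_shapes):
    """Mean squared distance of aligned configurations from their mean shape.

    `aligned_shapes` is a list of configurations; each configuration is a
    list of (x, y) tuples that are assumed to be Procrustes-aligned already.
    """
    n = len(aligned_shapes)
    n_landmarks = len(aligned_shapes[0])
    # Mean shape: landmark-wise average of the coordinates.
    mean_shape = [
        (
            sum(shape[j][0] for shape in aligned_shapes) / n,
            sum(shape[j][1] for shape in aligned_shapes) / n,
        )
        for j in range(n_landmarks)
    ]
    total = 0.0
    for shape in aligned_shapes:
        for (x, y), (mx, my) in zip(shape, mean_shape):
            total += (x - mx) ** 2 + (y - my) ** 2
    return total / n
```

A group whose faces all lie close to the group mean yields a small value, so a lower disparity corresponds to a more homogeneous set of face shapes.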

Procrustes residuals were first laterally reflected along the midline axis. The corresponding paired landmarks on the left and right sides of faces were then relabeled, and the numeric labels of landmarks on the left side swapped for the landmark labels from the right side and vice versa. To measure asymmetry, we calculated Procrustes distances between the original and the mirrored (reflected and relabeled) configuration, whereby larger values indicated greater facial asymmetry.
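The reflect-and-relabel procedure can be sketched in a few lines of Python. The sketch assumes that each configuration is already aligned so that the facial midline lies on the vertical axis (x = 0), and that `pairs` lists the indices of corresponding left and right landmarks; both the function names and this data layout are illustrative assumptions, and no re-superimposition of the mirrored configuration is performed here.

```python
import math


def mirror_and_relabel(shape, pairs):
    """Reflect a configuration across x = 0 and swap paired landmark labels.

    `shape` is a list of (x, y) tuples; `pairs` is a list of (left, right)
    index tuples. Midline landmarks are not listed in `pairs` and keep
    their labels.
    """
    mirrored = [(-x, y) for x, y in shape]
    for left, right in pairs:
        mirrored[left], mirrored[right] = mirrored[right], mirrored[left]
    return mirrored


def asymmetry_score(shape, pairs):
    """Distance between a configuration and its mirrored, relabeled copy."""
    mirrored = mirror_and_relabel(shape, pairs)
    squared = sum(
        (x1 - x2) ** 2 + (y1 - y2) ** 2
        for (x1, y1), (x2, y2) in zip(shape, mirrored)
    )
    return math.sqrt(squared)
```

A perfectly symmetric configuration coincides with its mirrored copy and scores zero; any left-right mismatch increases the score.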

To test the difference between the AI-generated and control group means, we conducted a Procrustes ANOVA with Procrustes residuals as the response variable and group as the independent variable. The analysis was done using the procD.lm() function in the geomorph R package (Adams et al. 2023; Baken et al. 2021). The effect size was assessed as the adjusted coefficient of determination (R²). To test the difference in the levels of facial asymmetry between the AI and CTRL groups, we used a nonparametric Kruskal-Wallis test based on Procrustes distances between the left and right side of the face. P-values for testing the group differences in morphological disparity were estimated by a permutation test (based on 10,000 iterations) using the morphol.disparity() function within the geomorph R package.
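The logic of a permutation test for a difference in disparity can be sketched as follows. This Python example is a simplified stand-in, not the geomorph procedure: it assumes each individual is a flat list of aligned coordinates, computes residuals from each group's own mean, and shuffles those residuals between groups. The function names, the default number of iterations, and the fixed random seed are assumptions made for the illustration.

```python
import random


def residuals_from_group_mean(group):
    """Subtract the group mean vector from every individual in the group."""
    n = len(group)
    dims = len(group[0])
    mean = [sum(ind[d] for ind in group) / n for d in range(dims)]
    return [[ind[d] - mean[d] for d in range(dims)] for ind in group]


def mean_squared_norm(residuals):
    """Disparity of a set of residual vectors: mean squared length."""
    return sum(x * x for res in residuals for x in res) / len(residuals)


def disparity_permutation_test(group_a, group_b, iterations=1000, seed=1):
    """Return (observed absolute disparity difference, permutation p-value)."""
    rng = random.Random(seed)
    res_a = residuals_from_group_mean(group_a)
    res_b = residuals_from_group_mean(group_b)
    observed = abs(mean_squared_norm(res_a) - mean_squared_norm(res_b))
    pooled = res_a + res_b
    n_a = len(res_a)
    hits = 1  # the observed arrangement counts as one permutation
    for _ in range(iterations - 1):
        rng.shuffle(pooled)
        diff = abs(mean_squared_norm(pooled[:n_a]) - mean_squared_norm(pooled[n_a:]))
        if diff >= observed:
            hits += 1
    return observed, hits / iterations
```

Working with residuals rather than raw coordinates keeps a difference in group means from inflating the null distribution, so the test addresses only the spread around each group's mean.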

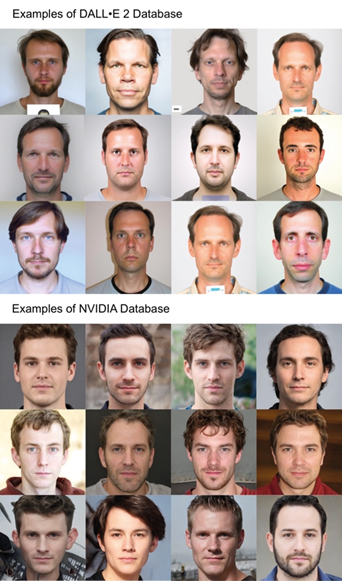

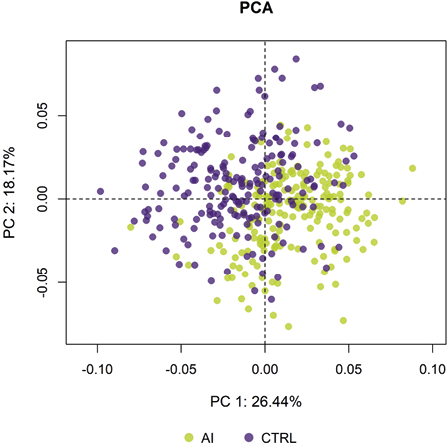

The artificial faces were statistically different from the natural faces in terms of facial morphology (F(1, 395) = 44.588, p < 0.001, R² = 0.101); see also Figure 2 for a two-group PCA plot and supplementary figure S1 for a PCA plot with separate visualization of DAL and NVID-generated faces.
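As a consistency check on the reported statistics, the F value and the effect size are linked by a standard ANOVA identity: with model and residual degrees of freedom df1 and df2, the plain (unadjusted) proportion of explained variance is R² = F·df1 / (F·df1 + df2). The short Python sketch below applies this identity to the values reported above; the function name is illustrative.

```python
def r_squared_from_f(f_value, df_model, df_residual):
    """Proportion of explained variance implied by an F statistic."""
    explained = f_value * df_model
    return explained / (explained + df_residual)


# Values reported for the Procrustes ANOVA: F = 44.588, df = (1, 395).
r2 = r_squared_from_f(44.588, 1, 395)
print(round(r2, 3))  # 0.101
```

In other words, group membership (AI-generated versus natural) accounts for roughly one tenth of the total shape variation in the pooled sample.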

Fig. 2. A visualization of the principal component analysis (PCA) on Procrustes residuals showing the first (PC1) and second (PC2) principal component, which together explained 44.6% of overall shape variation. Dots depict individual faces shown in different colors based on whether they were AI-generated or natural, i.e., from the control group (CTRL).

The morphological disparity of the control group (MD_CTRL = 0.00396) was significantly higher (p < 0.001) than the morphological disparity of AI-generated faces (MD_AI = 0.00289), indicating that the facial shape of real individuals is more variable than the shape of AI-generated faces. When images generated by DAL (MD_DAL = 0.00143) and NVID (MD_NVID = 0.00173) were compared with natural faces separately, both groups of artificial faces differed significantly (p < 0.001) from the control group in the level of morphological disparity. On the other hand, the DAL and NVID faces did not differ significantly from each other in the level of morphological disparity (p = 0.24).

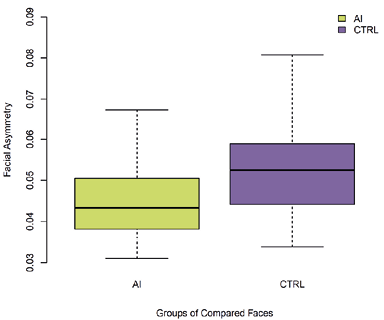

The AI-generated faces also showed lower levels of facial asymmetry than the control group (Δmean(CTRL–AI) = 0.007, W = 12446, p < 0.001); see Figure 3. This also held when all three groups were compared separately (Kruskal-Wallis chi-squared = 49.512, df = 2, p < 0.001; see also supplementary Figure S2).
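For readers unfamiliar with the W statistic, the sketch below shows one common definition, assuming that W here denotes the Wilcoxon rank-sum (Mann-Whitney) statistic: the number of pairs in which a value from the first sample exceeds a value from the second, with ties counted as one half. The function name and the toy inputs are illustrative and are not the study data.

```python
def rank_sum_w(sample_x, sample_y):
    """Count pairs (x, y) with x > y; ties contribute one half."""
    w = 0.0
    for x in sample_x:
        for y in sample_y:
            if x > y:
                w += 1.0
            elif x == y:
                w += 0.5
    return w
```

Under this definition, W ranges from 0 to the product of the two sample sizes (197 × 200 = 39,400 for the samples described here), and values far from the midpoint of 19,700 indicate that one group tends to have systematically lower asymmetry scores than the other.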

Fig. 3. Boxplots comparing the distribution of the asymmetry scores of faces generated by AI and the control group of natural faces (CTRL). Thick solid lines within the boxes indicate group medians. Whiskers denote the upper and lower quartiles.

Using geometric morphometrics based on faces with a neutral expression, we showed that AI-generated faces statistically differ from natural faces in terms of their facial shape. Moreover, AI-synthesized faces are less variable in facial shape, i.e., show lower morphological disparity, and have lower levels of facial asymmetry than natural faces do. From the perspective of objectively quantifiable morphometric measurements, artificial and natural faces are still distinguishable, although people cannot see these differences (Bray et al. 2023; Lago et al. 2022; Nightingale and Farid 2022; Rossi et al. 2022; Tucciarelli et al. 2022).

Our finding of greater shape variation in natural faces compared to artificial ones seems to support the findings of the perception study by Nightingale and Farid (2022). The lower shape variation of AI-generated faces points to their higher levels of averageness, and since objects closer to the average are more common, people are likely to perceive them as more typical and therefore more natural (and more ‘real’). In other words, natural faces are more variable, which also implies that in a sample of natural faces one finds more distinctive faces than in an AI-generated sample – and because distinctive faces are encountered less frequently, they are perceived as less natural, and therefore also less trustworthy (Sofer et al. 2015).

This leads us back to the “uncanny valley” phenomenon (Geller 2008). During the evolution of robotics, this term was used to describe a hypothesized relationship between a machine’s appearance and the emotional response it evokes. It has been speculated that a robot’s appearance and movements, which are somewhere in-between “somewhat human” and “fully human,” make people feel apprehensive and insecure. Once, however, a robot or another synthetic object becomes sufficiently human-like, the “uncanny valley” ends.

Our results show that AI-generated human faces have by now emerged from the uncanny valley not just because GANs make them perfectly realistic, but in part also due to quite the opposite. Artificial faces are perceived as more trustworthy also because they show lower variation and thus higher levels of averageness (lower distinctiveness) than natural faces do. Human-like generated images are in increasing demand in marketing communication, mass media, social media, and the entertainment industry. These results could therefore motivate the developers of advanced AI engines to improve their algorithms and teach their machines to create more natural-looking content.

At the same time, our study has several limitations. First, we based our analysis solely on male faces. This was driven chiefly by the fact that female faces generated by GANs – especially those produced by the NVID platform – almost always smile. Therefore, we could not easily compare them with our sample of natural faces with a neutral expression. Another limitation is that we used only faces of European origin: future morphometric studies may improve on this by including different ethnicities and genders, as was in fact done in Nightingale and Farid’s perception study (Nightingale and Farid 2022). Nevertheless, using Czech faces instead of faces of various European origins for comparison with artificially generated faces provides a more rigorous test of the observed phenomenon regarding the smaller shape variation of artificial faces. The Czech population is relatively homogeneous and potentially less variable compared to the broader European population. If we were to use a more diverse European sample, the difference in morphological disparity between the control group and artificial faces would be even more pronounced. Similarly, this logic applies to the seemingly wider age range of artificial faces. A greater age range would typically result in higher shape variation. However, the shape variation of artificial faces was lower compared to natural faces.

Experimental studies of facial perception that use artificially generated facial stimuli in the future should first calibrate them to levels of symmetry and overall morphological variation similar to those found in local populations of natural faces in order to ensure an appropriate level of ecological validity. For that purpose, researchers may use existing facial databases with clear geographical information about the origin of the photos (Courset et al. 2018; Lakshmi et al. 2021; Ma et al. 2015; Saribay et al. 2018). The final question is whether researchers should use artificially generated faces as fully ecologically valid stimuli in perceptual task-oriented research. Probably yes, but only with great caution. However, one can expect that at least in the case of European faces, the synthetic and natural morphologies will soon be indistinguishable.

Acknowledgments

This study was supported by the Czech Science Foundation, Grant number: GA21-10527S. We wish to thank Anna Pilátová for English proofreading.

Data availability

All data and R code are available at https://osf.io/5p2qf/

Ethical Approval

This study was performed in line with the principles of the Declaration of Helsinki. All procedures mentioned and followed were approved by the Institutional Review Board of the Faculty of Science of Charles University (protocol ref. number 2023/06). This study does not include information or images that could lead to the identification of any real person.

Authors’ contribution

OB generated artificial faces, and manually applied landmarks on facial configurations; KK provided natural faces, performed geometric morphometrics and statistical analyses. Both authors interpreted the results and wrote the article.

Conflict of interest statement

We have no known conflict of interest to disclose.

Adams DC, Otárola-Castillo E. 2013. Geomorph: An R Package for the Collection and Analysis of Geometric Morphometric Shape Data. Methods in Ecology and Evolution 4(4):393–99. https://doi.org/10.1111/2041-210X.12035

Adams D, Collyer M, Kaliontzopoulou A, Baken E. 2023. Geomorph: Geometric Morphometric Analyses of 2D and 3D Landmark Data. Available at: https://cran.r-project.org/web/packages/geomorph/geomorph.pdf

Anantrasirichai N, Bull D. 2022. Artificial Intelligence in the Creative Industries: A Review. Artificial Intelligence Review 55(1):589–656. https://doi.org/10.1007/s10462-021-10039-7

Baken E, Collyer M, Kaliontzopoulou A, Adams D. 2021. Geomorph v4.0 and gmShiny: Enhanced Analytics and a New Graphical Interface for a Comprehensive Morphometric Experience. Methods in Ecology and Evolution 12. https://doi.org/10.1111/2041-210x.13723

Bray SD, Johnson SD, Kleinberg B. 2023. Testing Human Ability to Detect “Deepfake” Images of Human Faces. Journal of Cybersecurity 9(1):tyad011. https://doi.org/10.1093/cybsec/tyad011

Cetinic E, She J. 2022. Understanding and Creating Art with AI: Review and Outlook. ACM Transactions on Multimedia Computing, Communications, and Applications 18(2):66:1–66:22. https://doi.org/10.1145/3475799

Courset R, Rougier M, Palluel-Germain R, Smeding A, Manto Jonte J, Chauvin A, et al. 2018. The Caucasian and North African French Faces (CaNAFF): A Face Database. International Review of Social Psychology 31(1):22. https://doi.org/10.5334/irsp.179

Geller T. 2008. Overcoming the Uncanny Valley. IEEE Computer Graphics and Applications 28(4):11–17. https://doi.org/10.1109/MCG.2008.79

Karras T, Laine S, Aila T. 2019. A Style-Based Generator Architecture for Generative Adversarial Networks. Available at: https://openaccess.thecvf.com/content_CVPR_2019/papers/Karras_A_Style-Based_Generator_Architecture_for_Generative_Adversarial_Networks_CVPR_2019_paper.pdf

Kleisner K. 2021. Morphological Uniqueness: The Concept and Its Relationship to Indicators of Biological Quality of Human Faces from Equatorial Africa. Symmetry 13(12):2408. https://doi.org/10.3390/sym13122408

Kleisner K, Pokorný Š, Saribay SA. 2019. Toward a New Approach to Cross-Cultural Distinctiveness and Typicality of Human Faces: The Cross-Group Typicality/ Distinctiveness Metric. Frontiers in Psychology 10. https://doi.org/10.3389/fpsyg.2019.00124

Kleisner K, Tureček P, Roberts SC, Havlíček J, Valentova JV, Akoko RB, et al. 2021. How and Why Patterns of Sexual Dimorphism in Human Faces Vary across the World. Scientific Reports 11(1):5978. https://doi.org/10.1038/s41598-021-85402-3

Kleisner K, Tureček P, Saribay S, Pavlovič O, Leongómez J, Roberts S, et al. 2023. Distinctiveness and Femininity, Rather than Symmetry and Masculinity, Affect Facial Attractiveness across the World. Evolution and Human Behavior. https://doi.org/10.1016/j.evolhumbehav.2023.10.001

Lago F, Pasquini C, Böhme R, Dumont H, Goffaux V, Boato G. 2022. More Real Than Real: A Study on Human Visual Perception of Synthetic Faces [Applications Corner]. IEEE Signal Processing Magazine 39(1):109–16. https://doi.org/10.1109/MSP.2021.3120982

Lakshmi A, Wittenbrink B, Correll J, Ma DS. 2021. The India Face Set: International and Cultural Boundaries Impact Face Impressions and Perceptions of Category Membership. Frontiers in Psychology 12. https://doi.org/10.3389/fpsyg.2021.627678

Liefooghe B, Oliveira M, Leisten LM, Hoogers E, Aarts H, Hortensius R. 2023. Are Natural Faces Merely Labelled as Artificial Trusted Less? Collabra: Psychology 9(1):73066. https://doi.org/10.1525/collabra.73066

Linke L, Saribay SA, Kleisner K. 2016. Perceived Trustworthiness Is Associated with Position in a Corporate Hierarchy. Personality and Individual Differences 99:22–27. https://doi.org/10.1016/j.paid.2016.04.076

Ma DS, Correll J, Wittenbrink B. 2015. The Chicago Face Database: A Free Stimulus Set of Faces and Norming Data. Behavior Research Methods 47(4):1122–35. https://doi.org/10.3758/s13428-014-0532-5

Marcus G, Davis E, Aaronson S. 2022. A Very Preliminary Analysis of DALL-E 2. Available at: https://www.researchgate.net/publication/360311114_A_very_preliminary_analysis_of_DALL-E_2

Miller EJ, Steward BA, Witkower Z, Sutherland CAM, Krumhuber EG, Dawel A. 2023. AI Hyperrealism: Why AI Faces Are Perceived as More Real Than Human Ones. Psychological Science 34(12):1390–1403. https://doi.org/10.1177/09567976231207095

Moshel ML, Robinson AK, Carlson TA, Grootswagers T. 2022. Are You for Real? Decoding Realistic AI-Generated Faces from Neural Activity. Vision Research 199:108079. https://doi.org/10.1016/j.visres.2022.108079

Mustak M, Salminen J, Mäntymäki M, Rahman A, Dwivedi YK. 2023. Deepfakes: Deceptions, Mitigations, and Opportunities. Journal of Business Research 154:113368. https://doi.org/10.1016/j.jbusres.2022.113368

Nightingale SJ, Farid H. 2022. AI-Synthesized Faces Are Indistinguishable from Real Faces and More Trustworthy. Proceedings of the National Academy of Sciences 119(8):e2120481119. https://doi.org/10.1073/pnas.2120481119

Pasquini C, Laiti F, Lobba D, Ambrosi G, Boato G, De Natale F. 2023. Identifying Synthetic Faces through GAN Inversion and Biometric Traits Analysis. Applied Sciences 13(2):816. https://doi.org/10.3390/app13020816

Rossi S, Kwon Y, Auglend OH, Mukkamala RR, Rossi M, Thatcher J. 2022. Are Deep Learning-Generated Social Media Profiles Indistinguishable from Real Profiles? Available at: https://www.researchgate.net/publication/363584758_Are_Deep_Learning-Generated_Social_Media_Profiles_Indistinguishable_from_Real_Profiles

Saribay SA, Biten AF, Meral EO, Aldan P, Třebický V, Kleisner K. 2018. The Bogazici Face Database: Standardized Photographs of Turkish Faces with Supporting Materials. PLOS ONE 13(2):e0192018. https://doi.org/10.1371/journal.pone.0192018

Schlager S. 2017. Chapter 9 – Morpho and Rvcg – Shape Analysis in R: R-Packages for Geometric Morphometrics, Shape Analysis and Surface Manipulations. In: G. Zheng, S. Li, and G. Székely. Statistical Shape and Deformation Analysis. Academic Press. Pp. 217–56.

Sofer C, Dotsch R, Wigboldus DHJ, Todorov A. 2015. What Is Typical Is Good: The Influence of Face Typicality on Perceived Trustworthiness. Psychological Science 26(1):39–47. https://doi.org/10.1177/0956797614554955

Tucciarelli R, Vehar N, Chandaria S, Tsakiris M. 2022. On the Realness of People Who Do Not Exist: The Social Processing of Artificial Faces. iScience 25(12):105441. https://doi.org/10.1016/j.isci.2022.105441

Wong AD. 2022. BLADERUNNER: Rapid Countermeasure for Synthetic (AI-Generated) StyleGAN Faces. https://doi.org/10.48550/arXiv.2210.06587